
We’ve previously discussed several problems commonly faced by moderators and administrators of online communities. Spam and general toxic behavior are day-to-day concerns for moderation teams, and despite the efforts of moderators and platform administrators, curbing toxicity remains an ever-present challenge. In this follow-up, we examine the evolving issues around user-generated content and toxicity, explore potential solutions, and discuss how moderation strategies can adapt to the new challenges of today’s digital landscape.

Modern issues with user-generated content and toxicity

As the digital space continues to evolve, the scope and nature of toxicity in online communities are changing too. One of the most concerning trends is the growth of polarizing discourse. Social media platforms such as X (formerly Twitter) and Facebook have become hotbeds of divisive debate, where extreme opinions often drown out more moderate voices. Organized campaigns make this worse, most notably brigading, in which users from outside a community are directed to attack or overwhelm a specific group with harmful content or mass reporting.

At the same time, influencers (individuals who have amassed significant followings) often stir up controversy for personal gain. They may share misleading narratives or engage in online disputes that attract intense attention and spark further hostility, deepening the overall climate of toxicity.

While platforms like X and Facebook were once at the forefront of content moderation, in recent years they have increasingly adopted a “bare minimum effort” approach, aligning their strategies with shifting political ideals and focusing on minimizing backlash rather than actively preventing harmful behavior. This shift has drawn criticism, with many arguing that these platforms now allow toxicity to fester in pursuit of engagement at any cost.

Ways to reduce toxicity in online communities

Though managing toxicity in online communities is a difficult task, there are several proactive solutions that platforms and communities can implement to improve the environment for all users.

1. Leveraging AI in Content Moderation

AI itself can be polarizing; its use can provoke strongly negative reactions from some users, given that modern AI models are typically trained on user data. Used properly, however, it can still be a useful moderation tool. Given the sheer volume of user-generated content, AI can supplement human moderators by flagging potentially harmful content for review. It should not replace human moderators; instead, it should act as an “extra pair of eyes” that catches offensive or inappropriate material early and reduces the burden on individual moderators.
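To make the “extra pair of eyes” idea concrete, here is a minimal Python sketch of a flag-for-review step. It assumes some scoring function that returns a value between 0 and 1 for a piece of text; `toy_score` and its tiny blocklist are hypothetical placeholders for whatever classifier a platform actually uses, and the threshold is arbitrary. The key design choice is that the function only builds a prioritized list for human moderators and never removes anything on its own.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Post:
    post_id: int
    text: str


def flag_for_review(
    posts: list[Post],
    score: Callable[[str], float],
    threshold: float = 0.5,
) -> list[tuple[Post, float]]:
    """Return (post, score) pairs at or above the threshold, highest score first.

    This only builds a review list for human moderators; it never removes
    or hides a post on its own.
    """
    scored = [(post, score(post.text)) for post in posts]
    flagged = [(post, s) for post, s in scored if s >= threshold]
    flagged.sort(key=lambda pair: pair[1], reverse=True)
    return flagged


# Hypothetical stand-in for a real toxicity model: the fraction of words that
# appear on a small blocklist. A real deployment would call a trained classifier.
BLOCKLIST = {"idiot", "loser", "trash"}


def toy_score(text: str) -> float:
    words = [w.strip(".,!?") for w in text.lower().split()]
    if not words:
        return 0.0
    return sum(w in BLOCKLIST for w in words) / len(words)


if __name__ == "__main__":
    posts = [
        Post(1, "Thanks for the helpful answer!"),
        Post(2, "You are an idiot and a loser."),
        Post(3, "This take is trash."),
    ]
    for post, s in flag_for_review(posts, toy_score, threshold=0.2):
        print(f"post {post.post_id}: score {s:.2f}")
```

Running it lists posts 2 and 3 for review, highest score first, and leaves post 1 alone; a moderator still makes the final call on each flagged post.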

2. Platform Permissions and User Restrictions

One effective way to reduce harassment from new users is to tighten platform permissions. For example, requiring moderator approval for a new user’s first few posts or limiting their ability to send direct messages can significantly reduce the potential for trolling or harassment. By introducing such barriers, communities make it more likely that users have already contributed positively before they gain full privileges.

Further, a tiered system for user participation, in which users prove their commitment to a community through time and constructive contributions (or even monetary ones, such as paying to unlock features), can help minimize disruption from those who join only to target members with malicious intent.
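The tiered approach described in this section can be modeled as a simple rules check. The sketch below is a hypothetical illustration, not any particular forum platform’s permission system: the three tier names, the `User` fields, and every threshold are assumptions. New users have their posts held for approval and cannot send direct messages; a few approved posts (or, optionally, a paid contribution) lift pre-moderation; and direct messaging unlocks only after both time in the community and a track record of approved posts.

```python
from dataclasses import dataclass
from enum import IntEnum


class Tier(IntEnum):
    NEW = 0      # posts held for moderator approval, no direct messages
    MEMBER = 1   # posts publish immediately, still no direct messages
    TRUSTED = 2  # full privileges, including direct messages


# Hypothetical thresholds, for illustration only; tune them for your community.
APPROVED_POSTS_FOR_MEMBER = 3
DAYS_FOR_TRUSTED = 30
APPROVED_POSTS_FOR_TRUSTED = 20


@dataclass
class User:
    name: str
    days_since_join: int
    approved_posts: int
    paid_supporter: bool = False


def tier_for(user: User) -> Tier:
    # Full privileges require both time in the community and a record of
    # approved, constructive posts.
    if (user.days_since_join >= DAYS_FOR_TRUSTED
            and user.approved_posts >= APPROVED_POSTS_FOR_TRUSTED):
        return Tier.TRUSTED
    # A few approved posts, or a monetary contribution, lifts pre-moderation.
    if user.approved_posts >= APPROVED_POSTS_FOR_MEMBER or user.paid_supporter:
        return Tier.MEMBER
    return Tier.NEW


def needs_premoderation(user: User) -> bool:
    return tier_for(user) == Tier.NEW


def can_send_direct_messages(user: User) -> bool:
    return tier_for(user) >= Tier.TRUSTED
```

Keeping the thresholds as named constants makes it easy for a moderation team to tighten them during a brigading wave and relax them again afterward.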

3. Transparency and Community-Driven Moderation

Transparency in moderation is crucial for fostering trust between users and the platform. Being open about the rules, enforcement policies, and the process of reporting violations can help reduce confusion and dissatisfaction among users. Additionally, platforms should ensure that their moderator staff comes from the community they are moderating. Community members who are familiar with the culture and nuances of the space are more likely to make fair, informed decisions that align with the values of the community.

Equally important is ensuring that the rules are clear and well publicized. Platforms must also adapt their moderation policies to emerging issues, as new forms of harassment or toxicity can arise rapidly in response to societal shifts. This requires agility: updating the rules, providing the necessary context as issues evolve, and making users aware of those updates as they happen.

4. Segmenting Controversial Discussions

To prevent debates on sensitive or politically charged topics from derailing a community, it can help to create separate spaces for such discussions. For example, political or controversial subjects could be confined to a special section of the platform or to premium-member areas, where users are more committed to the community and its rules. These sections could carry stricter penalties for violating community guidelines, setting clearer expectations for behavior in these high-stakes discussions.

By segmenting controversial content into designated spaces, platforms can encourage more thoughtful discussion and keep toxic fallout from spilling over into the broader community, where it could alienate other users or encourage aggressive behavior.
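Segmented spaces with stricter rules can be expressed as per-section policies. In this minimal sketch, the section names, the premium-membership flag, and the penalty ladders are all hypothetical; the point is that the controversial section both restricts who can enter and escalates penalties faster than the general area.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SectionPolicy:
    name: str
    members_only: bool
    # A user's first violation in this section gets penalties[0], the second
    # gets penalties[1], and so on; once the list runs out, the last (harshest)
    # entry keeps applying.
    penalties: tuple[str, ...]


# Hypothetical sections and penalty ladders, for illustration only.
GENERAL = SectionPolicy(
    "general", members_only=False,
    penalties=("warning", "24-hour mute", "7-day suspension", "ban"),
)
POLITICS = SectionPolicy(
    "politics", members_only=True,
    penalties=("24-hour mute", "30-day section ban", "ban"),
)


def can_enter(section: SectionPolicy, is_premium_member: bool) -> bool:
    return is_premium_member or not section.members_only


def penalty_for(section: SectionPolicy, prior_violations: int) -> str:
    index = min(prior_violations, len(section.penalties) - 1)
    return section.penalties[index]
```

With these example ladders, a first offense earns a "warning" in the general section but a "24-hour mute" in the politics section, and non-premium users cannot enter the politics section at all.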

Moderation Team

Creating a Moderation Team

When creating a moderation team, make sure you have enough moderators to handle the volume of content your users generate and the number of daily active users. A smaller community may be able to rely on crowdsourced moderation to control toxicity and spam, but as it grows, you’ll need a dedicated team to monitor users. This is not a hard-and-fast rule: depending on how much toxicity your community experiences, you may need more or fewer moderators.

You also need to decide how to structure your team. You could give every moderator the ability to moderate all content on the forum, or you could assign moderators to specific forums, which is useful if your community hosts several distinct types of content or if some forums see more traffic than others. Both approaches are valid; you just need to decide whether you want moderators overseeing the community broadly or dedicated moderators who focus solely on smaller sections.
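Both structures can be represented with a single permission check. In the sketch below, which is hypothetical and not tied to any specific forum software, a moderator with no assigned forums is treated as global, while any other moderator is limited to their assigned forums. The `uncovered_forums` helper shows one way to spot sections that nobody is able to moderate, which matters most with the dedicated-moderator approach.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Moderator:
    name: str
    # None means a global moderator who can act anywhere on the forum;
    # otherwise, the set of forums this moderator is assigned to.
    forums: Optional[frozenset[str]] = None


def can_moderate(mod: Moderator, forum: str) -> bool:
    return mod.forums is None or forum in mod.forums


def uncovered_forums(all_forums: set[str], team: list[Moderator]) -> set[str]:
    """Forums that no moderator on the team is able to moderate."""
    return {f for f in all_forums if not any(can_moderate(m, f) for m in team)}
```

A mixed team works too: a couple of global moderators can back up forum-specific moderators in high-traffic sections.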

When creating a moderation team, keep your moderators’ safety in mind: enforcing the rules rarely makes anyone popular, and moderators can become targets of abuse. Give your moderators the option to remain anonymous, for example by using a pseudonym or an avatar that doesn’t reflect their real-life appearance.

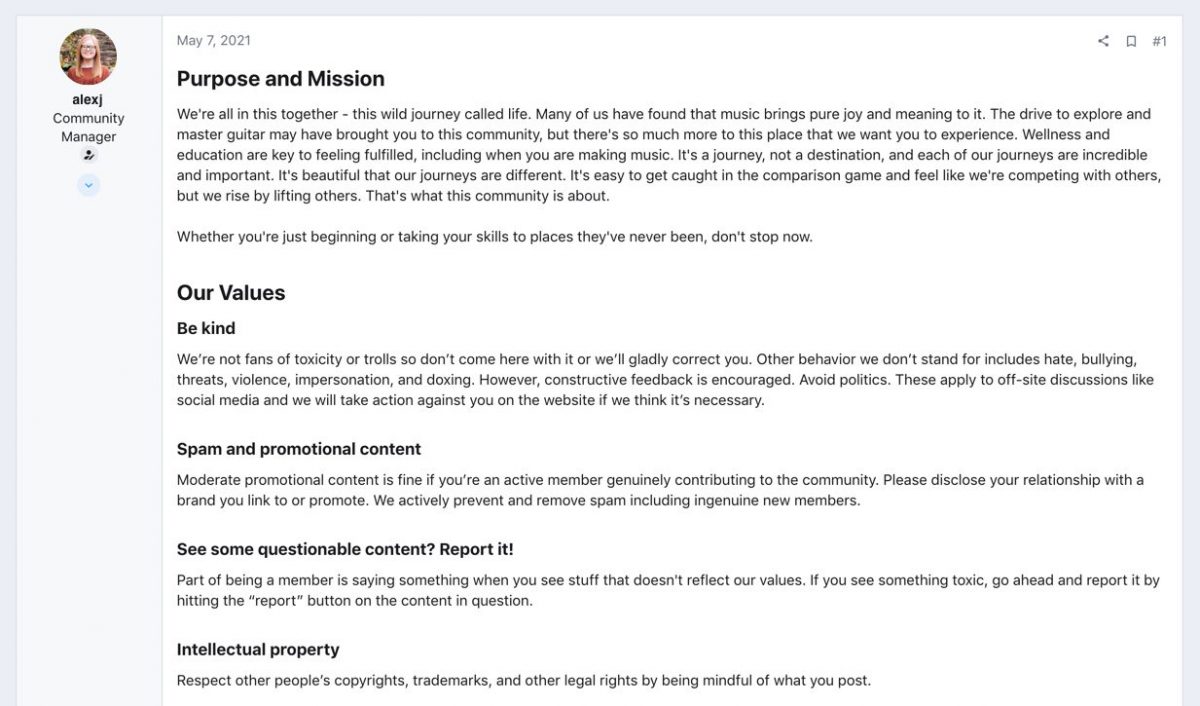

Forming Community Guidelines

As your community grows, a strong set of guidelines becomes essential. These guidelines shouldn’t be overbearing or tyrannical; they exist to protect users from negativity and to guide the discussion and direction of the community. Your guidelines should make clear what content is acceptable, what isn’t, and the consequences of violating them. Keep the document short and simply worded so that users can read it quickly and understand the rules they need to follow.

We’ve included an example of community guidelines to help you get started on creating rules for your own community!

Conclusion

As we look forward, the challenges associated with online toxicity and content moderation remain persistent, with new issues continually emerging as digital platforms grow and change. The rise of polarizing content, outside brigading, and influencer-driven toxicity has complicated efforts to maintain safe and welcoming online environments. However, with the integration of AI, stricter user permissions, greater transparency in moderation, and the segmentation of controversial topics, there are multiple paths forward to tackle these issues head-on.

Ultimately, preventing toxicity in online communities requires a combination of technological innovation, thoughtful policy-making, and a commitment to community-driven values. As platforms continue to evolve, so too must the strategies for keeping them safe, inclusive, and conducive to productive discourse. The future of online spaces hinges on a collective effort to curb toxicity while upholding the principles on which these communities were founded.