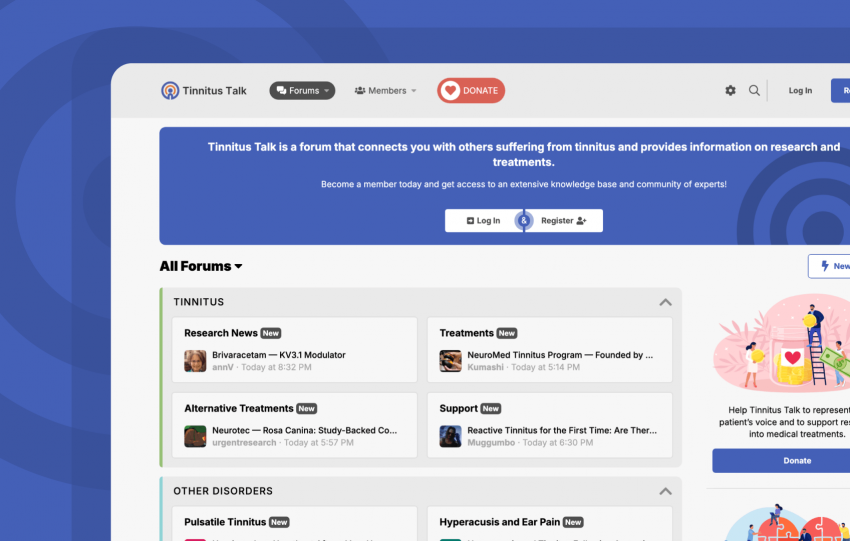

Across digital communities, keeping conversations healthy is an ongoing challenge. Spam floods discussions. Toxicity festers. And moderators often spend more time reacting than building up the spaces they care about.

We saw an opportunity to rethink that.

Veritas AI is our new multi-model moderation API built to reduce exposure to harmful content and give communities a smarter, faster, more thoughtful moderation workflow.

Why We Built It

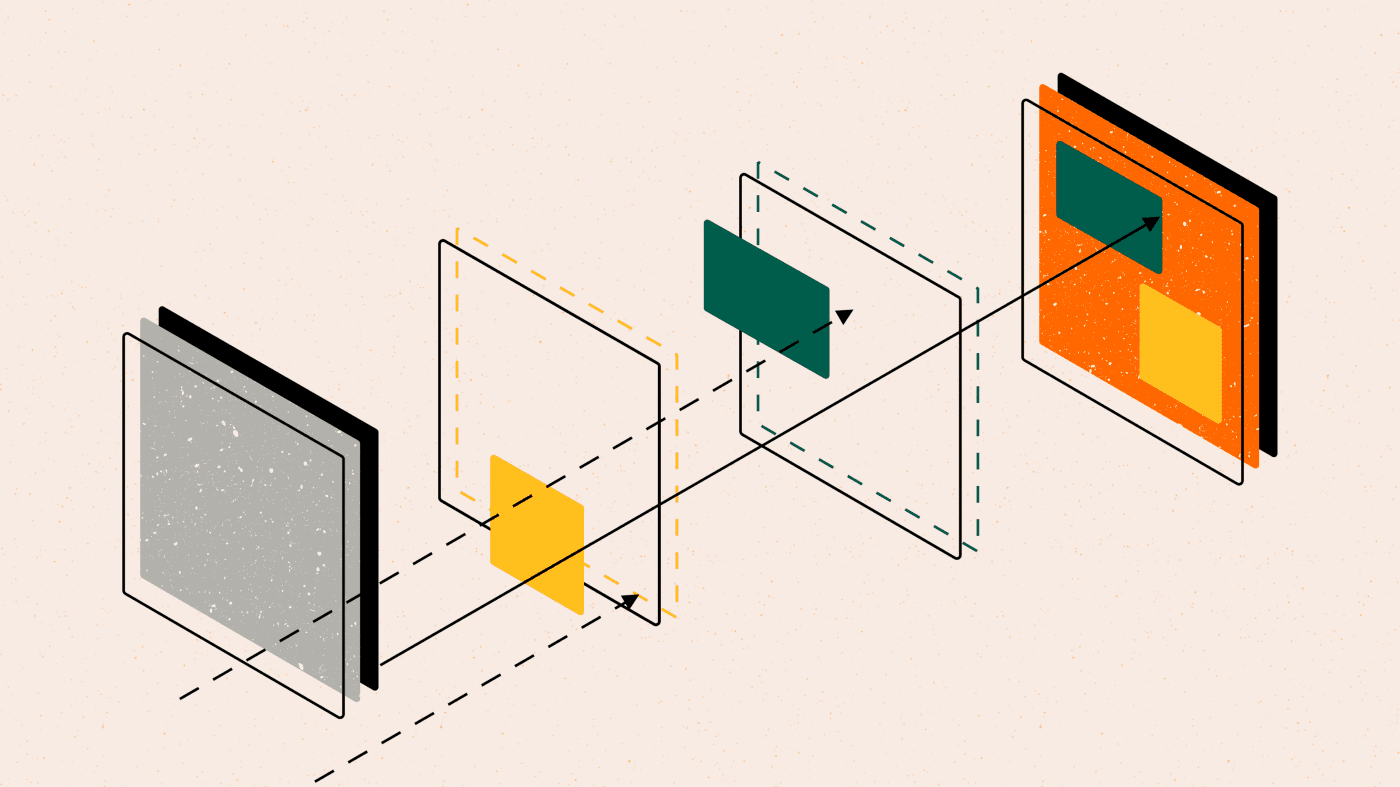

Most moderation tools rely on a single model to decide what’s toxic or spam. But real communities aren’t one-dimensional. And neither is content.

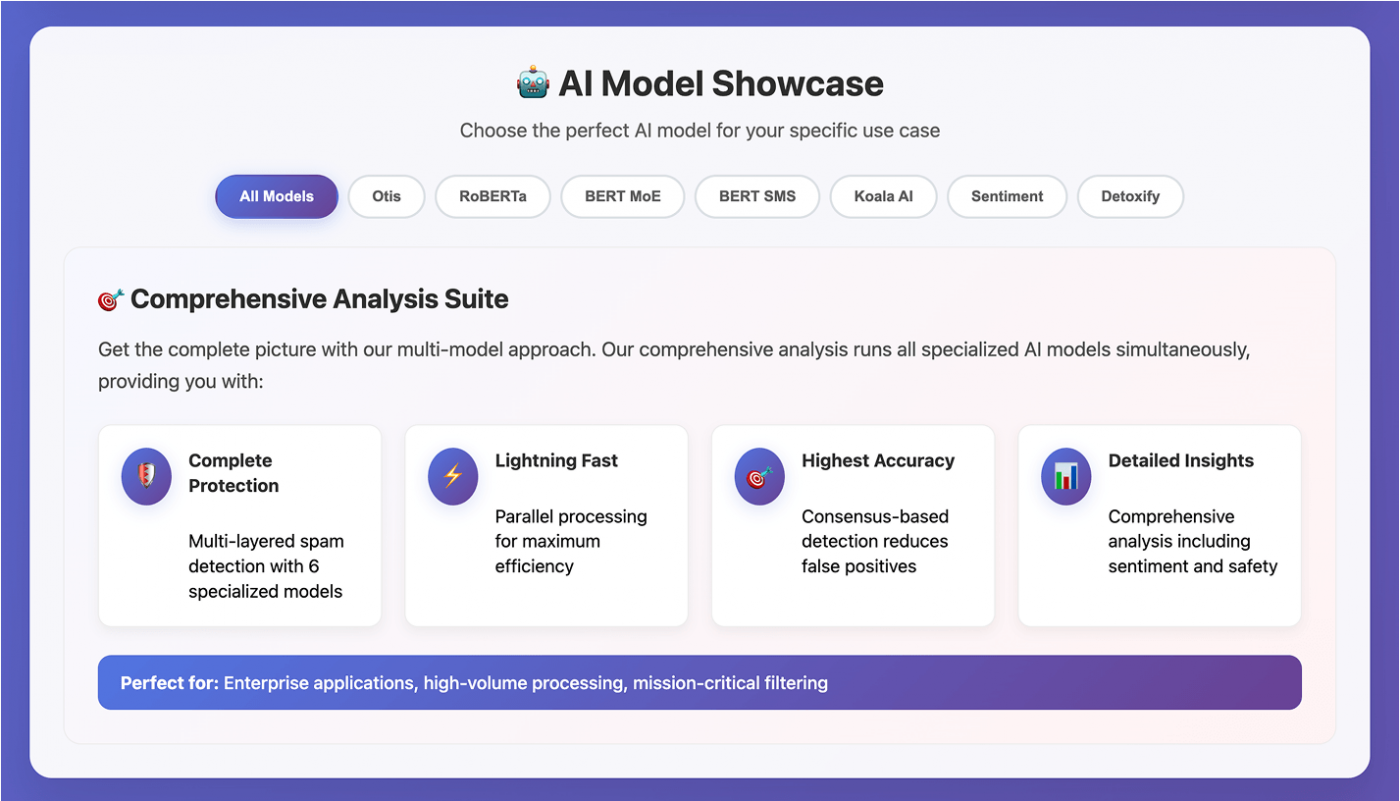

Veritas AI takes a different approach. Instead of one model calling the shots, it brings together a panel of expert systems, each trained to spot a specific type of harm: spam, toxicity, sentiment shifts, and more. Their combined analysis gives moderators not just a result, but a richer understanding of why content was flagged.

This lets you move beyond simple yes/no decisions and start moderating with more accuracy, nuance, and confidence.

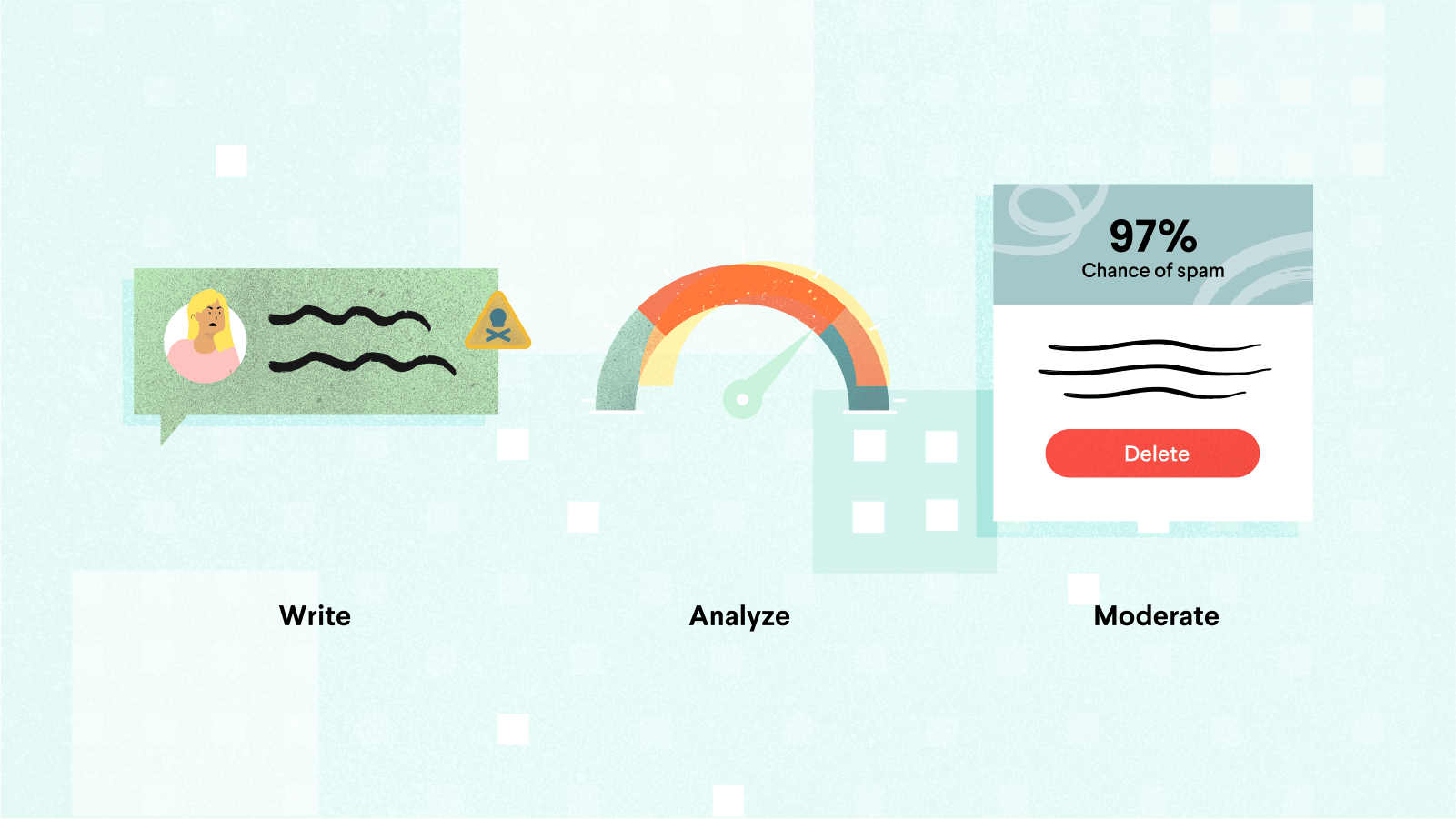

How It Works

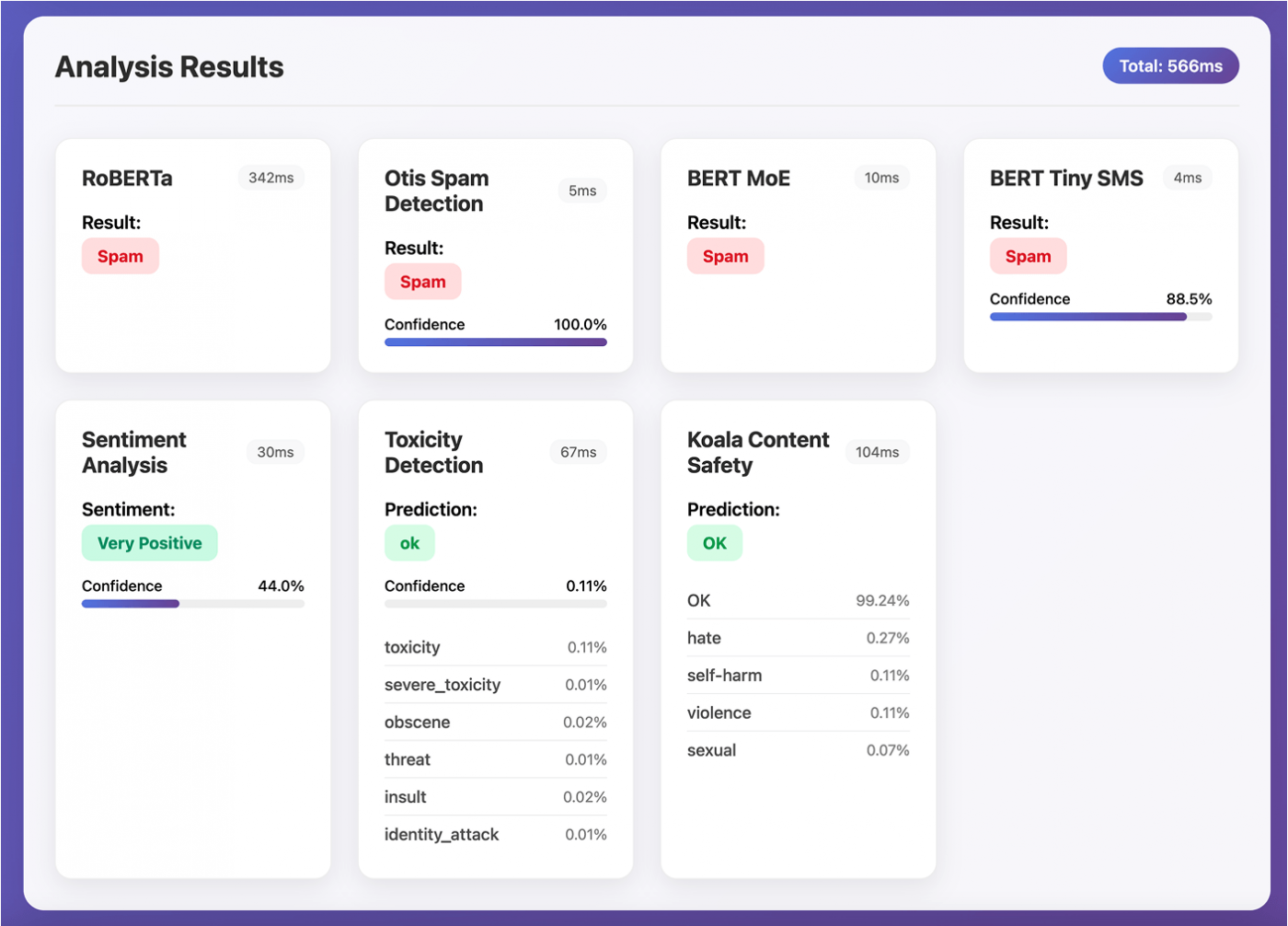

When a platform sends user-generated content to Veritas AI, the API distributes it across a collection of purpose-built models:

- Spam Detection: Otis, RoBERTa, BERT SMS, and others

- Toxicity + Safety: Detoxify, Koala AI

- Sentiment + Context: General-purpose sentiment engines

Each model brings a unique lens. The API consolidates their outputs into a single decision, paired with metadata that explains the rationale and shows confidence levels across each model.
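To make the consolidation step concrete, here is a minimal sketch of how per-model outputs could be merged into one decision plus explanatory metadata. It is an illustration, not the Veritas AI implementation: the `ModelVerdict` type, the `consolidate` function, the field names, and the averaging rule with a 0.5 threshold are all assumptions made for this example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ModelVerdict:
    """One model's opinion about a piece of content (hypothetical shape)."""
    model: str      # e.g. "detoxify"
    category: str   # e.g. "spam", "toxicity", "sentiment"
    score: float    # confidence in [0, 1] that the content is harmful


def consolidate(verdicts, threshold=0.5):
    """Merge per-model verdicts into a single decision with metadata.

    Assumption: scores are averaged within each category, and a category
    is flagged when its average reaches the threshold.
    """
    by_category = {}
    for v in verdicts:
        by_category.setdefault(v.category, []).append(v.score)

    category_scores = {c: mean(scores) for c, scores in by_category.items()}
    reasons = sorted(c for c, s in category_scores.items() if s >= threshold)

    return {
        "flagged": bool(reasons),          # the single decision
        "reasons": reasons,                # which categories triggered it
        "category_scores": category_scores,
        "model_scores": {v.model: v.score for v in verdicts},
    }
```

Calling `consolidate` with two spam verdicts of 0.9 and 0.7 and a toxicity verdict of 0.1 would flag the content for spam only, while still exposing every individual model score so a moderator can see where the models agreed and where they did not.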

What that means for your team:

- Higher Accuracy: Consensus-based decisions reduce both false positives and false negatives.

- Faster Response Times: Models run in parallel, so a request waits on the slowest model rather than on all of them in sequence.

- Deeper Insight: Every decision comes with traceable logic, not a black box.
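The parallel execution and consensus ideas above can be sketched in a few lines. This is a toy illustration under stated assumptions: `score_with` is a stand-in that fakes a model call with a short sleep and a keyword check, and `fan_out` and `majority_flag` are hypothetical helper names, not part of the Veritas AI API.

```python
import asyncio


async def score_with(model_name, text):
    """Stand-in for a real model call (assumption: returns a score in [0, 1])."""
    await asyncio.sleep(0.01)  # simulates network or inference latency
    score = 0.9 if "free money" in text.lower() else 0.1
    return model_name, score


async def fan_out(text, model_names):
    """Send the same text to every model concurrently and collect the scores."""
    results = await asyncio.gather(*(score_with(m, text) for m in model_names))
    return dict(results)


def majority_flag(scores, threshold=0.5):
    """Flag content only when more than half of the models exceed the threshold."""
    votes = sum(1 for s in scores.values() if s >= threshold)
    return votes * 2 > len(scores)
```

Running `asyncio.run(fan_out("FREE MONEY now", ["a", "b", "c"]))` returns one score per model, and because the calls overlap, the total wait is close to one model's latency rather than three. A majority vote is only one possible consensus rule; weighted averages or per-category thresholds would fit the same structure.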

What It Helps You Do

1. Reduce Exposure to Harmful Content

Content that would’ve stayed up for hours now gets flagged in seconds. Veritas AI gives your team the speed to act before things escalate.

2. Support (and Retain) Your Moderators

Instead of digging through post histories and alerts, moderators get surfaced reports, smart filters, and context-rich insights. That frees them up to spend more time engaging and building community, not just firefighting.

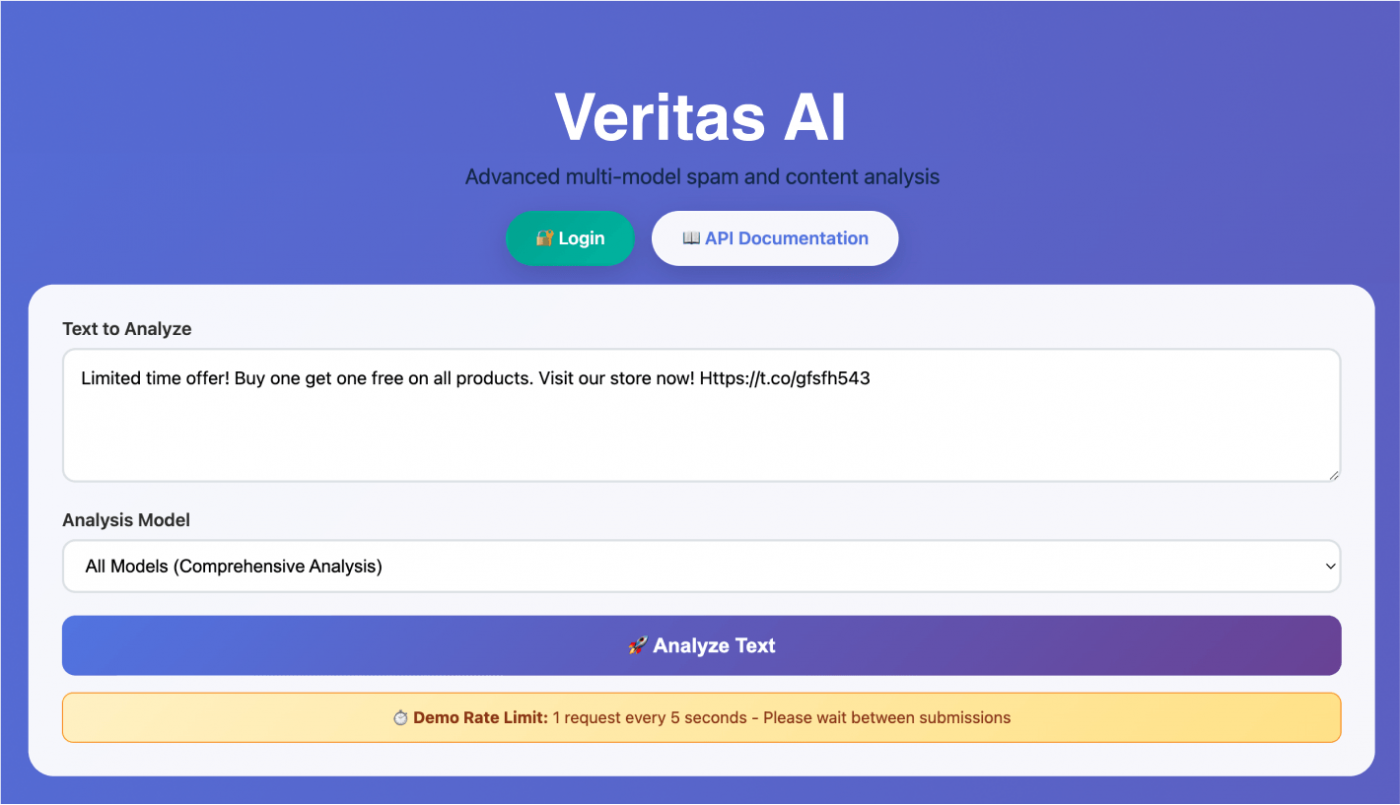

Try the Demo

We’ve built a working demo of the system so you can see it in action:

https://spam.audent.ai/

Drop in different kinds of content and compare how each model responds. The demo is already helping us tune which model combinations work best across real-world communities.

Built for Any Platform

Veritas AI is a fully documented API, ready to connect with any custom stack or third-party platform. Forums, comment sections, live chats: if there’s user-generated content, Veritas AI can plug in.

And if you’re working with a system we don’t natively support yet, we’d love to hear from you. Integration is straightforward, and we’re here to help shape it to your needs.

What’s Next

We’re testing Veritas AI with early partners now and refining the system based on real-world feedback. The full production-ready release is coming soon.

Long term, our goal is simple:

To make safer, smarter, more welcoming communities the norm, not the exception.