This year, the focus of online community management has shifted toward maintaining healthy, thriving digital spaces, especially those that offer relief from the typical social media experience and avoid over-moderating their content. As communities grow, they often attract bots, spam accounts, and individuals who, intentionally or not, contribute to a negative atmosphere through inappropriate content, bullying, or trolling. Addressing these challenges requires content moderation strategies that balance safety, free expression, and community engagement.

Mitigating Spam

Software tools have become integral to monitoring and preventing spam within online communities. These programs flag suspicious users or content so that moderators can act quickly. Common spam signals include fake email addresses, usernames stuffed with unrelated keywords, irrelevant links, and generic comments that don’t contribute to the discussion. Measures such as CAPTCHA during registration and mandatory email confirmation further deter automated spam bots, making it much harder for non-genuine accounts to gain access.
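To make the signals above concrete, here is a minimal sketch of a rule-based spam scorer in Python. It does not reflect how any particular tool works. The domain list, keyword list, weights, and threshold are all hypothetical values chosen for illustration, and counting links is only a rough stand-in for judging whether a link is irrelevant.

```python
import re

# All lists, weights, and the threshold below are illustrative assumptions.
DISPOSABLE_DOMAINS = {"mailinator.com", "temp-mail.test"}
SPAM_KEYWORDS = {"casino", "loans", "cheap", "pills", "crypto"}
GENERIC_COMMENTS = {"nice post", "great article", "thanks for sharing"}
URL_PATTERN = re.compile(r"https?://\S+")


def spam_score(email: str, username: str, comment: str) -> int:
    """Return a rough suspicion score; higher means more spam-like."""
    score = 0

    # Signal 1: throwaway email domain.
    domain = email.rsplit("@", 1)[-1].lower()
    if domain in DISPOSABLE_DOMAINS:
        score += 2

    # Signal 2: username stuffed with two or more unrelated keywords.
    name = username.lower()
    if sum(keyword in name for keyword in SPAM_KEYWORDS) >= 2:
        score += 2

    # Signal 3: several links in one comment (a proxy for irrelevant links).
    if len(URL_PATTERN.findall(comment)) >= 2:
        score += 2

    # Signal 4: generic comment that adds nothing to the discussion.
    if comment.strip().lower().rstrip("!.") in GENERIC_COMMENTS:
        score += 1

    return score


def should_flag(email: str, username: str, comment: str, threshold: int = 3) -> bool:
    """Flag for moderator review rather than removing automatically."""
    return spam_score(email, username, comment) >= threshold
```

A scorer like this only queues items for a moderator to look at. The final call stays with a person, which keeps the cost of a false positive low.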

Monitoring Toxicity

Preventing toxicity is essential to fostering a positive community environment. A dedicated moderation team, backed by strong spam-catching systems, plays a crucial role in this effort. Not all negative interactions stem from external threats; sometimes conflicts arise among existing members. Encouraging members to report content that violates guidelines, and promoting a shared responsibility for upholding community standards, can significantly reduce toxic behavior. This proactive approach helps issues get addressed promptly and keeps the atmosphere welcoming for all members.

The Role of AI in Moderation

Artificial Intelligence (AI) has become a cornerstone of modern content moderation. AI-powered tools filter harmful content efficiently, detecting nuanced hate speech, misinformation, and harmful behavior in real time. However, AI is not infallible: false positives and false negatives occur, which is why a hybrid approach with human oversight is needed. Combining AI efficiency with human judgment allows for more accurate moderation, especially in complex cases where context is crucial. Transparency in AI moderation has also improved, with platforms providing clearer explanations for content removal and established appeals processes.
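One common way to implement the hybrid approach is confidence-based routing: the model's output decides whether a post is handled automatically or sent to a person. The sketch below assumes a classifier that returns a harm probability between 0 and 1. The function name `route`, the `Decision` type, and both thresholds are hypothetical and would need tuning against real data.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # "remove", "approve", or "human_review"
    reason: str  # short explanation that can be shown to the user


def route(harm_probability: float,
          remove_at: float = 0.95,
          approve_below: float = 0.20) -> Decision:
    """Route a post based on an assumed classifier score in [0, 1]."""
    if not 0.0 <= harm_probability <= 1.0:
        raise ValueError("harm_probability must be between 0 and 1")

    # Very confident the content is harmful: act automatically.
    if harm_probability >= remove_at:
        return Decision("remove", "High-confidence policy violation")

    # Very confident the content is fine: let it through.
    if harm_probability < approve_below:
        return Decision("approve", "No violation detected")

    # Everything in between is where context matters: send to a human.
    return Decision("human_review", "Uncertain score, needs human judgment")
```

Widening the gap between the two thresholds sends more posts to human reviewers and fewer to automatic handling. Storing the `reason` alongside each decision also supports the clearer removal explanations and appeals mentioned above.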

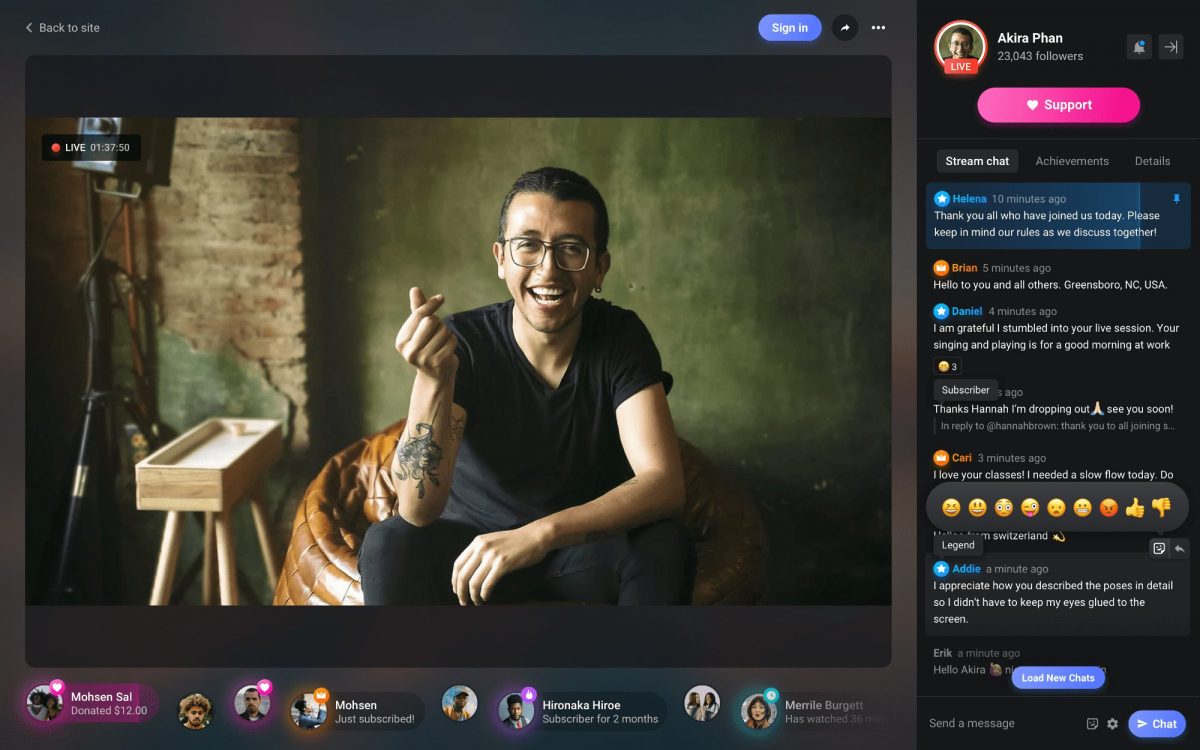

Decentralized and Community-Led Moderation

A notable trend in 2025 is the shift toward decentralized moderation. Many online communities now empower users to participate in governance, including community-led moderation boards and user-driven reporting systems. This approach enhances fairness and reduces bias, allowing independent communities to set their own rules and create diverse online spaces that cater to different needs. Such models promote a sense of ownership among members, leading to more engaged and self-regulating communities.

Balancing Free Speech and Safety

One of the ongoing challenges in content moderation is balancing free speech with the need for a safe online environment. In 2025, community guidelines have become more flexible, allowing for context-sensitive moderation rather than rigid enforcement. Many platforms have adopted tiered warning systems, temporary bans, and rehabilitation programs instead of permanent bans, aiming to educate users rather than simply punish them. Governments and regulators also play a role, requiring platforms to provide clear policies on content moderation while avoiding overreach that could stifle free expression. Transparency reports have become standard, giving users insight into how moderation decisions are made.
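A tiered system like the one described above can be modeled as an escalation ladder that tracks violations per user. This is a minimal sketch, not any platform's actual policy. The step names, their order, and the `EnforcementLadder` class are assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical escalation steps, ordered from mildest to strictest.
DEFAULT_LADDER = [
    "warning",
    "second_warning",
    "temporary_ban",
    "rehabilitation_program",
]


class EnforcementLadder:
    """Track violations per user and return the next enforcement step."""

    def __init__(self, ladder=None):
        self.ladder = list(ladder or DEFAULT_LADDER)
        self.violations = defaultdict(int)

    def record_violation(self, user_id: str) -> str:
        # Use the step matching the prior violation count, capped at the
        # last rung so repeat offenders stay on the final step.
        step = min(self.violations[user_id], len(self.ladder) - 1)
        self.violations[user_id] += 1
        return self.ladder[step]
```

For example, a user's first call to `record_violation` returns `"warning"`, and later calls move one rung up until the last step repeats. A fuller version might let old violations expire over time, so that a single mistake does not follow a user indefinitely.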

Looking Ahead

Content moderation in 2025 is more dynamic, transparent, and community-driven than ever before. While challenges remain, continued advances in AI, decentralized governance, and mental-health-conscious approaches are shaping a more balanced digital ecosystem. As online interactions continue to grow, moderation strategies must adapt so that online communities remain safe, engaging, and inclusive for all.