
The goal of this project was to build an AI purchase assistant to help users determine which Apple products are worth the cost and compare specifications between products. We planned to deliver this resource in a chat “question and answer” format while training the bot on relevant content to maintain accuracy and give us control over tone.

What’s a Large Language Model (LLM)?

Large Language Models or LLMs can be thought of as extremely fuzzy data compression mechanisms where several terabytes of data are reduced to fractions of a percent of their original size. This results in an LLM, or “database,” that can reasonably reproduce the source material that was used to train it, but as a “paraphrase” or “general gist” instead of a perfect copy.

Considerations

Throughout the project, we needed to determine how to deliver a high-quality product while also addressing shortcomings common in LLMs:

- Staying up to date with the latest information

- Reducing or eliminating hallucinations to ensure accurate information

- Including unique data to train the LLM

- Inferring the topic of discussion with little or no direction from the user

- Refusing to answer unrelated questions

Process

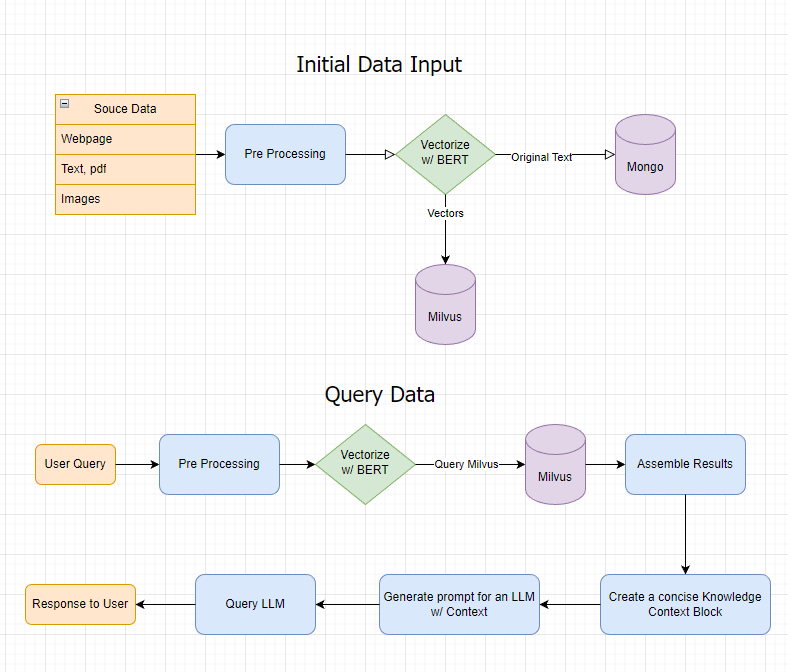

The overall technique for building the assistant is RAG, or “Retrieval Augmented Generation.” RAG enhances the base capabilities of the LLM by retrieving relevant text and including it in the prompt, which fills in missing information and overrides incorrect information present in the LLM. The following is a breakdown of the overall process to implement this approach.

The input process:

- Preprocessing data: Source material such as web pages and documents is fed into a preprocessor to extract the relevant information. This can sometimes involve converting data into a form more digestible by LLMs. For example, a table such as:

| Animal | Speed |
| --- | --- |
| Cheetah | Fast |
| Turtle | Slow |

should be converted into a list of facts that are “searchable”:

- Animal Cheetah is fast

- Animal Turtle is slow
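The table-to-facts conversion above can be sketched in a few lines of Python. This is only an illustration, not the preprocessor we used: the function name `table_to_facts` and the exact sentence template are assumptions. The template includes the column name so that tables with more than two columns stay unambiguous.

```python
def table_to_facts(header, rows):
    """Turn every non-key cell of a table into one plain-language fact.

    The first column is treated as the subject of each row (an assumption
    that fits simple lookup tables like the one above).
    """
    subject_label = header[0]
    facts = []
    for row in rows:
        subject = row[0]
        for column, value in zip(header[1:], row[1:]):
            facts.append(
                f"{subject_label} {subject} {column.lower()} is {value.lower()}"
            )
    return facts


header = ["Animal", "Speed"]
rows = [["Cheetah", "Fast"], ["Turtle", "Slow"]]

for fact in table_to_facts(header, rows):
    print(fact)
# Animal Cheetah speed is fast
# Animal Turtle speed is slow
```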

- Vectorizing: The preprocessed information then needs to be converted to a form that can be searched accurately using natural language. The search results supply the relevant context that later serves as a knowledge base for the LLM. This is accomplished with an embedding model (the “transformer” here, built on the same kind of architecture as an LLM) that processes chunks of text and returns a “vector” for each chunk. Each vector is a list of numbers that positions the chunk in a space where text with similar meaning lands close together. Used standalone, without an accompanying LLM, a vector search is a powerful replacement for keyword search.

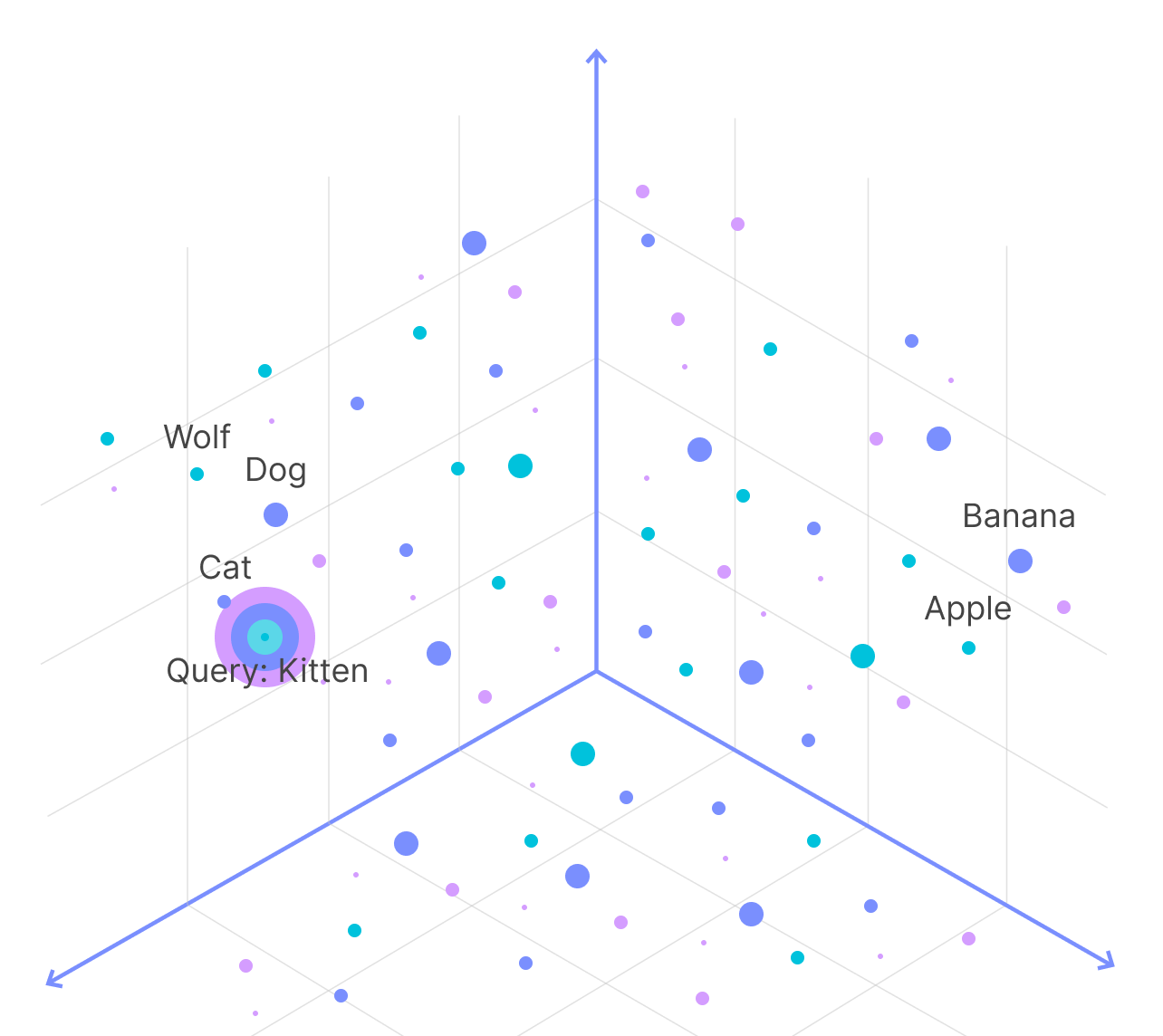

To visualize how this works, imagine only 3 dimensions, where a single word is assigned a position (vector):

In this visual representation of vectors, the word Dog is “closer” to the word Wolf than to the word Banana within the space, so Dog and Wolf can be said to be “related.” Note that the transformer AI model is responsible for assigning these vectors to the text. Now imagine not 3 dimensions, but thousands. This is a vector space in a nutshell.
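To make “closer” concrete, here is a small sketch that compares three hand-picked 3-dimensional vectors using cosine similarity, one common way to measure how related two vectors are. The coordinates are made up for illustration; a real embedding model would assign them, and would use far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


# Hypothetical positions, chosen by hand so that Dog and Wolf sit near each other.
toy_vectors = {
    "dog": (0.9, 0.8, 0.1),
    "wolf": (0.8, 0.9, 0.2),
    "banana": (0.1, 0.2, 0.9),
}

dog_wolf = cosine_similarity(toy_vectors["dog"], toy_vectors["wolf"])
dog_banana = cosine_similarity(toy_vectors["dog"], toy_vectors["banana"])

print(f"dog vs wolf:   {dog_wolf:.2f}")
print(f"dog vs banana: {dog_banana:.2f}")
```

With these toy values, the Dog and Wolf score comes out much higher than the Dog and Banana score, which is the numeric version of “Dog is closer to Wolf.”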

- Storing information in a vector database: Once the vectors have been obtained, they are stored in a vector database, a type of database that specializes in storing and searching vectors. It answers questions such as “Which 5 vectors are nearest to vector X?”

- Storing information in MongoDB: We used MongoDB in this example to store the original non-vectorized text along with metadata such as user and source information. Basically, it holds anything that needs to be retained but is not a “vector.”
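The split between the two stores can be sketched with plain in-memory stand-ins. Everything here is hypothetical: `InMemoryVectorIndex` is not a real vector database, the `documents` dictionary only plays the role MongoDB had in our setup, and the 2-dimensional vectors are invented. The point is that the vector index holds only IDs and vectors, while the text and metadata live elsewhere under the same ID.

```python
import math


class InMemoryVectorIndex:
    """Toy stand-in for a vector database: stores vectors, returns nearest IDs."""

    def __init__(self):
        self._vectors = {}

    def add(self, doc_id, vector):
        self._vectors[doc_id] = tuple(vector)

    def nearest(self, query, k=5):
        """Return the IDs of the k stored vectors closest to `query`."""
        ranked = sorted(
            self._vectors,
            key=lambda doc_id: math.dist(self._vectors[doc_id], query),
        )
        return ranked[:k]


# Stand-in for the document store: original text plus metadata, keyed by ID.
documents = {
    "fact-cheetah": {"text": "Animal Cheetah is fast", "source": "example table"},
    "fact-turtle": {"text": "Animal Turtle is slow", "source": "example table"},
}

index = InMemoryVectorIndex()
index.add("fact-cheetah", (0.9, 0.1))  # invented vector
index.add("fact-turtle", (0.1, 0.9))   # invented vector

# Search by vector, then look up the readable text by ID.
for doc_id in index.nearest((0.8, 0.2), k=1):
    print(documents[doc_id]["text"])
# Animal Cheetah is fast
```

A real vector database would use an approximate nearest-neighbor index rather than sorting every stored vector, but the interface is similar in spirit.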

The query process:

- User query: This is the original text supplied by the user, such as “What is an M2 processor?”

- Preprocessing: The query then needs to be filtered to remove things like special characters or unnecessary white space.

- Vectorizing: Similar to data processing, the query needs to be converted to vector form so that the vector database can be searched for similar entries.

- Assembling results: Once we have the results, the matches returned by the vector searches are combined and duplicate data is removed.

- Generating a prompt: An LLM prompt is generated using the vector search results and the processed user query. A simple example would be: “Given this [information], answer this [question].”

- Query LLM: The inherent ability of the LLM to process text and reason is applied to the assembled data.

- Response to user: The response from the LLM is sent back to the user.
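The text-handling steps of the query process (preprocessing, assembling results, and generating a prompt) can be sketched as below. The vector search and the LLM call are left out because they depend on the services used. All function names, the character filter, and the prompt wording are assumptions for illustration. In particular, the final instruction in the prompt is one possible way to encourage the bot to decline questions the retrieved text does not cover.

```python
import re


def preprocess_query(query):
    """Drop unusual special characters and collapse repeated white space."""
    cleaned = re.sub(r"[^\w\s?.,'-]", "", query)
    return " ".join(cleaned.split())


def merge_results(result_lists):
    """Combine several lists of retrieved text, removing duplicates in order."""
    seen = set()
    merged = []
    for results in result_lists:
        for text in results:
            if text not in seen:
                seen.add(text)
                merged.append(text)
    return merged


def build_prompt(context_chunks, question):
    """Fill the 'Given this [information], answer this [question]' template."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Given this information:\n"
        f"{context}\n\n"
        f"Answer this question: {question}\n"
        "If the information does not contain the answer, say so."
    )


question = preprocess_query("  What   is an M2 processor?  <>")

# Placeholder search results; a real system would get these from the vector database.
chunks = merge_results([
    ["placeholder chunk A", "placeholder chunk B"],
    ["placeholder chunk B", "placeholder chunk C"],
])

print(build_prompt(chunks, question))
```

The resulting string is what would be sent to the LLM in the “Query LLM” step.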

Our AI purchase assistant in action

We then tested our custom bot on the latest information for Apple products and compared its responses to ChatGPT, currently considered the “gold standard” in accuracy and reliability.

Question: “What’s going to be new on the next iPad air?”

ChatGPT: “I don’t have the latest information on the upcoming iPad Air models or their features. For the most accurate and up-to-date details on the next iPad Air and its new features, I recommend checking Apple’s official announcements, press releases, or their website. Apple typically reveals information about new products during special events or updates their website. Keep an eye on Apple’s communication channels for the latest information about the next iPad Air.”

Our AI solution: “It is stated that a new iPad Air is expected to be introduced in March 2024. Additionally the new iPad Air models are expected to feature OLED displays, an M2 chip, and a redesigned Magic Keyboard accessory”

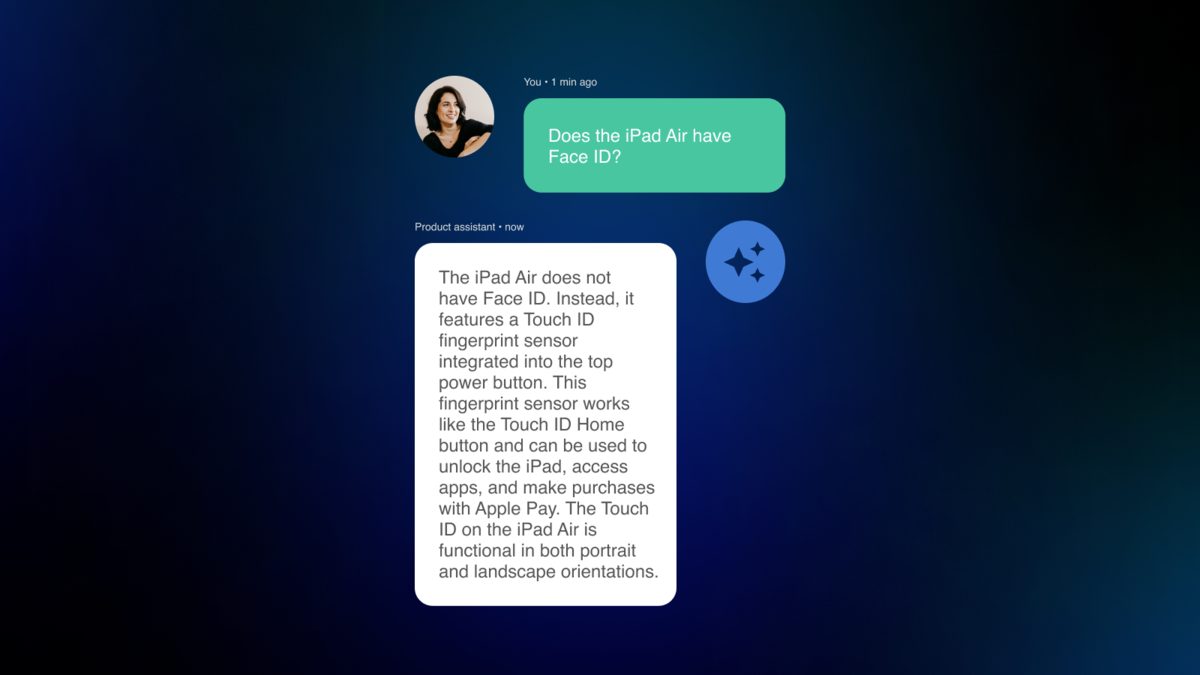

Question: “Does the iPad Air have Face ID?”

ChatGPT: “As of my last knowledge update in January 2022, the iPad Air (4th generation) does indeed feature Face ID. Face ID is a facial recognition technology developed by Apple, allowing users to unlock their device, make secure purchases, and use various apps by simply looking at the iPad’s front-facing TrueDepth camera.

Please note that device features can change with new releases or updates, so it’s a good idea to check the latest specifications on Apple’s official website or contact Apple support for the most current information on iPad Air features.”

Our AI solution: “No, the iPad Air does not have Face ID. It instead features Touch ID, which is a fingerprint scanner embedded in the iPad’s top button”

Question: “Best deal on the iPad Air”

ChatGPT: “I don’t have real-time internet access to check current deals”

Our AI solution: “The best deal on the iPad Air is currently available on Amazon for 499.00 down from 599.00.”

Question: “What do reviews think about the iPad Air?”

ChatGPT: “I don’t have real-time access to current reviews, but as of my last knowledge update in January 2022, the iPad Air has generally received positive reviews. Critics and users often praise its powerful performance, slim design, and compatibility with the Apple Pencil and Magic Keyboard. The device is known for its balance between features and price, making it an attractive option for many users.”

Our AI solution: “The reviews generally conclude that the iPad Air is a great value for the money, with performance on par with the previous iPad Pro thanks to the M1 chip and other Pro-like features. However, it is also pointed out that iPadOS makes it challenging to take full advantage of the performance capabilities.”

Question: “Who was President of The United States in 1950?”

ChatGPT: “In 1950, the President of the United States was Harry S. Truman. He served as the 33rd President from April 12, 1945 to January 20, 1953.”

Our AI solution: “Based on the provided text, there is no information about the President of The United States in 1950.”

The future

Now that we’ve built an LLM-based assistant to help Apple users make purchasing decisions, we need to create a user-facing view with chat functionality to make it accessible. Building it has shown that, although AI tools like ChatGPT are invaluable for everyday use, a dedicated assistant that you can update with the latest data provides more accurate and specific information, and lets users make decisions knowing they have the latest information on the relevant subject. We look forward to making this assistant accessible to all users and will provide an update when it’s launched!