
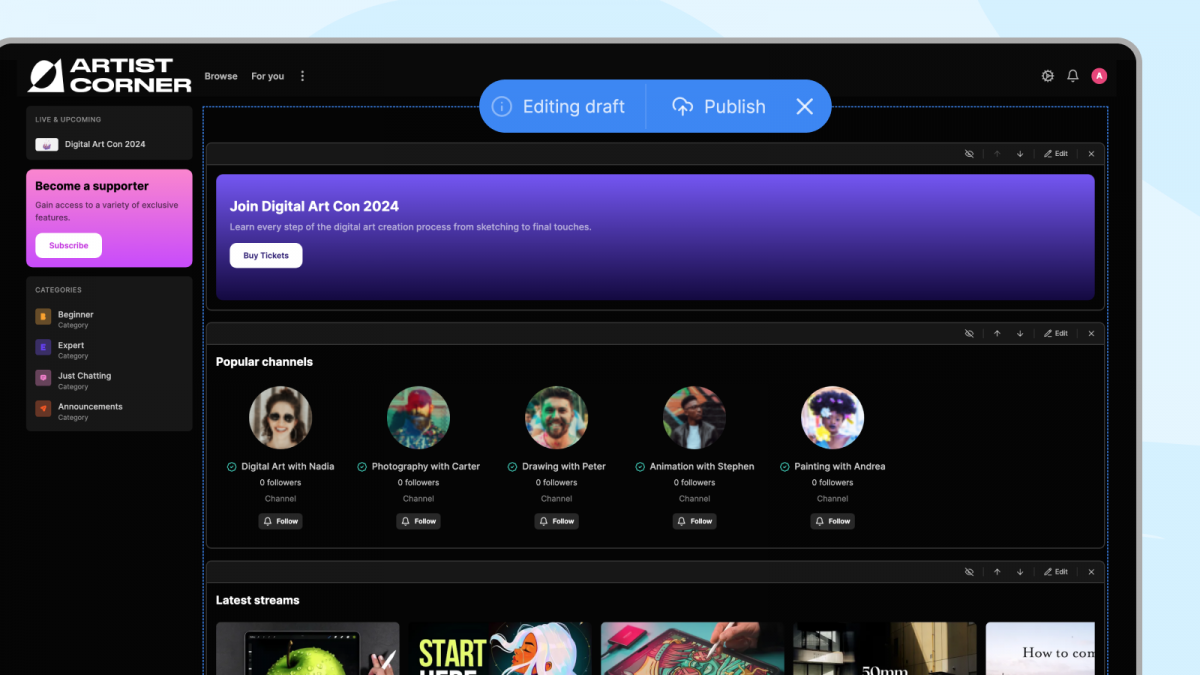

Over the last few weeks, we partnered with a large live events platform to develop a white-label experience where networks of content creators can come together to provide more value to their users. Below, we’ll share insights and practical strategies for tackling common obstacles in modern web development, particularly in large-scale, self-hosted environments.

Request waterfalls

We originally developed the project on Next.js 11. Back then, each page had to define its own server-side data-fetching function, which had us jumping through various hoops to abstract data fetching across a large number of routes.

With server components and streamed server queries, we reduced TTFB and minimized request waterfalls.

Here’s a contrived example:

Legacy architecture:

Request

-> [server,cached] fetch id from slug

-> serve HTML

-> [client] download and parse JS

-> [client] fetch dynamic content using id

-> Render dynamic content

Streamed server queries:

Request

-> [server,cached] fetch id from slug

-> serve HTML

-> [server] asynchronously fetch dynamic content using id

-> [client] download and parse JS (while query is streaming from server)

-> Render dynamic content

The difference grows with the length of the waterfall and with worse network conditions. On a slow connection, every extra client-server round trip is expensive, so starting the query on the server is vastly faster than waiting for the client to request it.
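To make the comparison concrete, here is a rough timing model of the two flows above. It is a sketch under simplifying assumptions: all durations are made-up numbers, the field names are hypothetical, and the cost of streaming the query result to the client is ignored.

```typescript
// Durations in milliseconds. All values used with this model are illustrative.
interface Timings {
  slugLookup: number;   // [server, cached] fetch id from slug
  htmlTransfer: number; // serve HTML to the client
  jsDownload: number;   // [client] download and parse JS
  contentQuery: number; // time for the backend to produce the dynamic content
  roundTrip: number;    // one extra client <-> server network round trip
}

// Legacy: the client can only start the content query after JS has loaded,
// and it pays a network round trip to do so. Every step is sequential.
function legacyTotal(t: Timings): number {
  return t.slugLookup + t.htmlTransfer + t.jsDownload + t.roundTrip + t.contentQuery;
}

// Streamed: the server starts the content query right after the slug lookup,
// so the query overlaps with the HTML transfer and the JS download.
function streamedTotal(t: Timings): number {
  const clientWork = t.htmlTransfer + t.jsDownload;
  return t.slugLookup + Math.max(t.contentQuery, clientWork);
}

const fastNetwork: Timings = {
  slugLookup: 20, htmlTransfer: 50, jsDownload: 300, contentQuery: 200, roundTrip: 50,
};
const slowNetwork: Timings = {
  slugLookup: 20, htmlTransfer: 400, jsDownload: 1500, contentQuery: 200, roundTrip: 600,
};

// Time saved by streaming on each network.
const savedFast = legacyTotal(fastNetwork) - streamedTotal(fastNetwork);
const savedSlow = legacyTotal(slowNetwork) - streamedTotal(slowNetwork);
```

With these numbers the streamed flow saves 250 ms on the fast network and 800 ms on the slow one, because the round trip and the query no longer sit on the critical path.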

Slow TTFB due to dynamic user-based content

With the Next.js App Router, each segment (page/layout) can define its own rendering strategy. Next.js also infers this from your usage of dynamic functions like cookies() and headers().

Our problem was that pages can change dramatically based on the user’s access level. But if we read cookies on the server, the entire route became dynamic (i.e. React renders on every request, with no full route cache available). This made full-route caching impossible for guests, even though all guests see the same thing.

This was solved by creating hidden “guest-only” pages for hot routes and marking them “force-static”. Then we’d check for the presence of token cookies in middleware and rewrite the path for guests to show the hidden guest-only page which would be in the full route cache, reducing both TTFB and server-load significantly for guest visitors.

Req / ->

? Has auth cookie -> next()

? No auth cookie -> rewrite(staticEquivalent)

This solution doesn’t allow CDN caching of HTML, but we couldn’t do that in the first place due to highly dynamic content.
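The routing decision above can be sketched as a pure function. The cookie name, the list of hot routes and the "/_guest" prefix are assumptions made for illustration, not the names used in the project.

```typescript
// Hypothetical names, chosen only for this sketch.
const AUTH_COOKIE = "token";
const GUEST_PREFIX = "/_guest";
const HOT_ROUTES = new Set<string>(["/", "/events"]);

// Returns the path of the static guest-only page, or null when the request
// should continue to the regular (dynamic) route.
function guestRewriteTarget(pathname: string, cookieNames: string[]): string | null {
  const hasAuthCookie = cookieNames.indexOf(AUTH_COOKIE) !== -1;
  if (hasAuthCookie) return null;              // logged-in users get the dynamic page
  if (!HOT_ROUTES.has(pathname)) return null;  // only hot routes have a guest twin
  return pathname === "/" ? GUEST_PREFIX : GUEST_PREFIX + pathname;
}
```

In middleware, a non-null result would be handed to `NextResponse.rewrite(new URL(target, request.url))` and a null result to `NextResponse.next()`, while the guest page itself would export `dynamic = "force-static"`.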

Version skew

This is a common problem with single-page applications where client-side navigation requires downloading chunks from the server. On self-hosted setups, this becomes a much harder problem to solve since you can’t keep the old files around and some users may have a tab open from 2-20 weeks ago.

- We use our websocket notifier service to forward logs from CI and notify users when a new update is available.

- Client-side links run a check on soft navigation to determine whether it would break the page.
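As a minimal sketch of the link check, assume each tab knows the build id it was loaded with and the latest build id announced to it. How those ids reach the client, and the names below, are assumptions rather than the project's actual wiring.

```typescript
// Hypothetical state tracked per tab.
interface VersionState {
  loadedBuildId: string;        // build the open tab was served from
  latestBuildId: string | null; // last build id announced to this tab, if any
}

type NavigationMode = "soft" | "hard";

// Soft (client-side) navigation needs chunks from the loaded build.
// If a different build has been announced, those chunks may be gone, so fall
// back to a full page load that fetches fresh HTML and assets.
function chooseNavigation(state: VersionState): NavigationMode {
  if (state.latestBuildId === null) return "soft";
  return state.latestBuildId === state.loadedBuildId ? "soft" : "hard";
}
```

A wrapped link component could call the router for "soft" and assign `window.location.href` for "hard".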

Runtime page builder with n components

The primary issue here was being able to resolve the list of components used on the page and only shipping client-side code for components that needed it and were, in fact, being used.

We solved this by leveraging React’s new server-components model:

- Server-only rendering allows many components to ship only rendered strings, so their dependency tree never reaches the client.

- Request waterfalls were eliminated by caching top-level requests on the server where cache could be invalidated immediately on-demand.

- Server-side request caching meant that highly interactive components that did require client-side code immediately had the data required to start subsequent fetches.
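The rule "only ship client code for components that need it and are actually on the page" can be illustrated with a small tree walk. In practice the server-components bundler makes this split; the registry, block shape and component names below are hypothetical and only model the idea.

```typescript
interface ComponentSpec {
  needsClientCode: boolean; // true for interactive components
}

interface PageBlock {
  type: string;
  children?: PageBlock[];
}

// Hypothetical registry of page-builder components.
const registry = new Map<string, ComponentSpec>([
  ["heading", { needsClientCode: false }],
  ["rich-text", { needsClientCode: false }],
  ["carousel", { needsClientCode: true }],
  ["chat", { needsClientCode: true }],
]);

// Walks the page tree and returns the unique component types that are both
// used on the page and require client-side code, sorted by name.
function clientComponentsFor(blocks: PageBlock[], specs: Map<string, ComponentSpec>): string[] {
  const found = new Set<string>();
  const visit = (block: PageBlock): void => {
    const spec = specs.get(block.type);
    if (spec !== undefined && spec.needsClientCode) found.add(block.type);
    (block.children ?? []).forEach((child) => visit(child));
  };
  blocks.forEach((block) => visit(block));
  return Array.from(found).sort();
}

const examplePage: PageBlock[] = [
  { type: "heading" },
  { type: "carousel", children: [{ type: "rich-text" }, { type: "carousel" }] },
];
```

For `examplePage`, only the carousel needs client code; the chat component is registered but unused, so nothing is shipped for it.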

Project-wide type-checking

This is not specific to Next.js, but you generally have to run project-wide type-checking as a command. By default, editors only show TypeScript errors within open files.

We modularize our codebase using Nx packages, and each package has its own tsconfig. We used a TypeScript project references pipeline that lets us run TypeScript on all project files incrementally. This, combined with a VS Code problem matcher, gives us a useful “Problems” tab that shows errors even from unopened files.

Slow deployment pipeline

As dependencies (especially huge devDependencies like Cypress) and project code grow, several issues come up in the deployment pipeline.

- Slow builds: Next.js is notorious for slow builds, and their solution is still a work in progress because they decided to essentially rewrite Webpack in Rust for an unknown reason.

  - This is hard to get around, but with an external build system (like Nx) you can separate frontend code into smaller bundles that are built separately and cached by Nx, effectively like using a pre-compiled npm package. This is a cumbersome setup but worth it in huge projects.

- Gigantic Docker images: our deployment pipeline involves building Docker images to push to AWS ECR. At some point our images started exceeding 2 GB in total size, so we made several changes:

  - Use node-alpine as the base image.

  - Configure Next.js to produce a “standalone” build and use shell scripts to move static assets and dependencies into one standalone directory.

  - Dependencies that cannot be traced during the Next.js build are copied separately into the final dependencies.

  - Result: the final image was well under 500 MB (including static assets).

Distributed caching in self-hosted clusters

Until a recent version, Next.js did not offer a custom cache-handler API. In a self-hosted environment, this meant that `revalidate` functions only revalidated the in-memory cache of the single instance that handled the call, while every other instance in the cluster kept serving stale data. This was a big problem.

We wrote a custom Redis cache handler that was later ported to the new API.
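The core idea is that every instance reads and invalidates through one shared store instead of its own memory. The sketch below is a simplified model, not our handler: it uses a synchronous in-memory store standing in for Redis (a real client is asynchronous), and the class and method names are assumptions that do not claim to match the Next.js cache-handler interface.

```typescript
// Minimal key-value interface standing in for a Redis client (assumption).
interface SharedStore {
  get(key: string): string | undefined;
  set(key: string, value: string): void;
  delete(key: string): void;
}

class MemoryStore implements SharedStore {
  private data = new Map<string, string>();
  get(key: string): string | undefined { return this.data.get(key); }
  set(key: string, value: string): void { this.data.set(key, value); }
  delete(key: string): void { this.data.delete(key); }
}

interface CacheEntry {
  value: string;
  tags: string[];
}

// One handler per app instance; all handlers share the same store.
class SharedCacheHandler {
  private store: SharedStore;

  constructor(store: SharedStore) {
    this.store = store;
  }

  get(key: string): string | null {
    const raw = this.store.get("entry:" + key);
    return raw === undefined ? null : (JSON.parse(raw) as CacheEntry).value;
  }

  set(key: string, value: string, tags: string[]): void {
    const entry: CacheEntry = { value, tags };
    this.store.set("entry:" + key, JSON.stringify(entry));
    // Maintain a tag -> keys index so a tag can be invalidated later.
    for (const tag of tags) {
      const keys = this.keysForTag(tag);
      if (keys.indexOf(key) === -1) keys.push(key);
      this.store.set("tag:" + tag, JSON.stringify(keys));
    }
  }

  // Deletes every entry carrying the tag; visible to all instances at once.
  revalidateTag(tag: string): void {
    for (const key of this.keysForTag(tag)) this.store.delete("entry:" + key);
    this.store.delete("tag:" + tag);
  }

  private keysForTag(tag: string): string[] {
    const raw = this.store.get("tag:" + tag);
    return raw === undefined ? [] : (JSON.parse(raw) as string[]);
  }
}
```

Two handlers built on the same store behave like two app instances: an entry written by one is readable by the other, and a `revalidateTag` call on either removes it for both.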

Frontend multi-tenancy

We needed to serve pages for various clients, each with its own subdomain or custom domain. We used Next.js middleware to resolve domains to client identifiers and rewrite to a dynamic route (`/[client]/…`). We then wrapped the Next.js router hooks and used lint rules to ban direct use of the unwrapped hooks in our own code. This allows developers to use the regular methods of manipulating router state while the rewrites and client resolution are handled transparently.
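A minimal sketch of the domain resolution and the two path mappings follows. The platform domain, the custom-domain table and the function names are hypothetical; a real mapping would come from a database or configuration.

```typescript
// Hypothetical values for illustration only.
const PLATFORM_DOMAIN = "example.com";
const CUSTOM_DOMAINS = new Map<string, string>([["tickets.acme.test", "acme"]]);

// Resolves a Host header to a client identifier, or null if unknown.
function resolveClient(host: string): string | null {
  const hostname = host.replace(/:\d+$/, "").toLowerCase(); // drop the port
  const custom = CUSTOM_DOMAINS.get(hostname);
  if (custom !== undefined) return custom;
  const suffix = "." + PLATFORM_DOMAIN;
  if (!hostname.endsWith(suffix)) return null;
  const subdomain = hostname.slice(0, -suffix.length);
  // Accept only a single-label subdomain such as "acme".
  return subdomain.length > 0 && subdomain.indexOf(".") === -1 ? subdomain : null;
}

// Middleware direction: public path -> internal `/[client]/…` path.
function toInternalPath(host: string, pathname: string): string | null {
  const client = resolveClient(host);
  if (client === null) return null;
  return "/" + client + (pathname === "/" ? "" : pathname);
}

// Wrapped-hook direction: internal path -> the path developers and users see.
function toPublicPath(client: string, internalPath: string): string {
  const prefix = "/" + client;
  if (internalPath === prefix) return "/";
  return internalPath.startsWith(prefix + "/") ? internalPath.slice(prefix.length) : internalPath;
}
```

The middleware would rewrite to the result of `toInternalPath`, and a wrapped pathname hook would apply `toPublicPath` so application code never sees the client segment.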

Long-running chat with history

We needed our chat component to allow scrolling back to the start. This required a virtualized chat that would lazily load more messages without keeping all the DOM nodes around.

The component had to:

- Render n messages based on viewport size.

- Keep a rendered buffer of messages based on scroll behavior.

- Maintain a coherent timeline of messages arriving over websockets, posted by the user, and loaded from history.

- Optimistically append messages when posted by the user (and allow reverting on a failed commit).

- Load older/newer messages as the user approaches the end of the messages pre-loaded in memory.
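The timeline and windowing requirements above can be sketched as follows. This is a simplified model under assumptions: the message shape, the temporary-id scheme for optimistic messages and the fixed row buffer are all hypothetical, and a production component would avoid re-sorting on every read.

```typescript
interface ChatMessage {
  id: string;        // server id, or a temporary id for optimistic messages
  sentAt: number;    // epoch milliseconds
  text: string;
  pending?: boolean; // true while an optimistic message awaits confirmation
}

class Timeline {
  private byId = new Map<string, ChatMessage>();

  // Merge messages from any source (socket, history page); duplicates collapse by id.
  merge(messages: ChatMessage[]): void {
    for (const message of messages) this.byId.set(message.id, message);
  }

  // Show the user's message immediately, before the server confirms it.
  appendOptimistic(tempId: string, text: string, now: number): void {
    this.byId.set(tempId, { id: tempId, sentAt: now, text, pending: true });
  }

  // Replace the optimistic message with the one saved by the server.
  confirm(tempId: string, saved: ChatMessage): void {
    this.byId.delete(tempId);
    this.byId.set(saved.id, saved);
  }

  // Remove the optimistic message after a failed commit.
  revert(tempId: string): void {
    this.byId.delete(tempId);
  }

  // Sorted by time, with the id as a tie-breaker for a stable order.
  ordered(): ChatMessage[] {
    return Array.from(this.byId.values()).sort(
      (a, b) => a.sentAt - b.sentAt || (a.id < b.id ? -1 : a.id > b.id ? 1 : 0)
    );
  }
}

// Index range [start, end) to render: the visible rows plus a buffer on each side.
function renderWindow(total: number, firstVisible: number, visibleCount: number, buffer: number): [number, number] {
  const start = Math.max(0, firstVisible - buffer);
  const end = Math.min(total, firstVisible + visibleCount + buffer);
  return [start, end];
}
```

Keying by id means a message that arrives both over the socket and in a history page is rendered once, and `renderWindow` keeps the DOM bounded no matter how long the history grows.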

Next.js limitations & drawbacks

- HTTP req and res objects are not exposed.

  - This makes complex logging and custom headers hard to impossible.

  - Headers can be set from next.config.js, but Next.js does not let you set your own Cache-Control header.

- Bundling is largely controlled by Next.js and is very different from the rest of the ecosystem, which has adopted Vite as the de facto bundler. You have to use the webpack API within next.config.js to achieve basic things like custom process.env string replacements.

- Self-hosting poses several challenges (explained above).

- At the time of writing, middleware always runs in the “edge” runtime. Node dependencies cannot be used even if you’re running on Node, and Vercel doesn’t seem to have plans to change this.

  - You’ll need to find edge/workers-compatible libraries for basic things like JWT handling, because popular Node libraries rely on Node’s crypto APIs.